Introduction to Yarn

Configuration

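On every node, edit the mapred-site.xml and yarn-site.xml configuration files (in Hadoop 2.x they live in etc/hadoop/ under the Hadoop installation directory).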

Configure mapred-site.xml:

```xml
<configuration>
    <!-- Framework used to execute MapReduce jobs, e.g. local, classic or yarn -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
```
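These site files are plain lists of name/value pairs, and a typo on a single node is easy to miss. The helper below can serve as a quick cross-node check. It is a hypothetical JDK-only sketch, not Hadoop's Configuration class, and it ignores Hadoop features such as final properties and variable substitution. It reads the property pairs of one site file so you can print mapreduce.framework.name on each machine.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

// Hypothetical helper (not Hadoop's Configuration class): collects the
// <property><name>/<value> pairs of a Hadoop-style *-site.xml file.
public class SiteXmlReader {

    static Map<String, String> read(InputStream in) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(in);
            Map<String, String> props = new LinkedHashMap<>();
            // XML comments are not elements, so they are skipped automatically
            NodeList properties = doc.getElementsByTagName("property");
            for (int i = 0; i < properties.getLength(); i++) {
                Element property = (Element) properties.item(i);
                props.put(childText(property, "name"), childText(property, "value"));
            }
            return props;
        } catch (Exception e) {
            throw new IllegalArgumentException("Cannot parse site XML", e);
        }
    }

    static Map<String, String> read(String xml) {
        return read(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
    }

    // Trimmed text of the first <tag> child, or "" if that child is missing
    private static String childText(Element parent, String tag) {
        Node node = parent.getElementsByTagName(tag).item(0);
        return node == null ? "" : node.getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        if (args.length != 1) {
            System.err.println("usage: java SiteXmlReader etc/hadoop/mapred-site.xml");
            return;
        }
        try (InputStream in = Files.newInputStream(Paths.get(args[0]))) {
            Map<String, String> props = read(in);
            System.out.println("mapreduce.framework.name = " + props.get("mapreduce.framework.name"));
        }
    }
}
```

Run it against etc/hadoop/mapred-site.xml on each node. Every node should print yarn.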

Configure yarn-site.xml:

```xml
<configuration>
    <!-- Must be set to mapreduce_shuffle for MapReduce programs to run -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <!-- Host that runs the ResourceManager -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>192.168.56.101</value>
    </property>
</configuration>
```
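Only the ResourceManager host name is configured above. In Hadoop 2.x, yarn-default.xml derives the individual ResourceManager addresses from it with ${yarn.resourcemanager.hostname} substitution. For example, yarn.resourcemanager.address defaults to ${yarn.resourcemanager.hostname}:8032, which is the address the job client connects to in the job log later in this article.

The Java sketch below mimics that expansion. RmAddresses is a hypothetical class, and the substitution is simplified: Hadoop's own Configuration class does more, for example it also consults Java system properties.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified ${name} substitution, illustrating how the ResourceManager
// addresses are derived from yarn.resourcemanager.hostname. Hypothetical helper.
public class RmAddresses {

    private static final Pattern VAR = Pattern.compile("\\$\\{([^}$]+)\\}");

    // Replaces each ${name} with its value from props; unknown names are left as-is
    static String expand(String value, Map<String, String> props) {
        Matcher m = VAR.matcher(value);
        StringBuffer sb = new StringBuffer();
        while (m.find()) {
            String replacement = props.getOrDefault(m.group(1), m.group(0));
            m.appendReplacement(sb, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(sb);
        return sb.toString();
    }

    // Default values as listed in yarn-default.xml for Hadoop 2.x
    static Map<String, String> derivedAddresses(String rmHost) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("yarn.resourcemanager.hostname", rmHost);

        Map<String, String> defaults = new LinkedHashMap<>();
        defaults.put("yarn.resourcemanager.address", "${yarn.resourcemanager.hostname}:8032");
        defaults.put("yarn.resourcemanager.scheduler.address", "${yarn.resourcemanager.hostname}:8030");
        defaults.put("yarn.resourcemanager.resource-tracker.address", "${yarn.resourcemanager.hostname}:8031");
        defaults.put("yarn.resourcemanager.admin.address", "${yarn.resourcemanager.hostname}:8033");
        defaults.put("yarn.resourcemanager.webapp.address", "${yarn.resourcemanager.hostname}:8088");

        Map<String, String> resolved = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : defaults.entrySet()) {
            resolved.put(e.getKey(), expand(e.getValue(), props));
        }
        return resolved;
    }

    public static void main(String[] args) {
        derivedAddresses("192.168.56.101").forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

This is why NodeManagers and clients only need the one hostname setting, unless you want non-default ports.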

For the full list of parameters and their defaults, see: https://hadoop.apache.org/docs/stable/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

Log in to 192.168.56.101 and start the ResourceManager:

```shell
$ ./sbin/yarn-daemon.sh start resourcemanager
starting resourcemanager, logging to /home/hadoop/hadoop-2.8.1/logs/yarn-root-resourcemanager-vm1.out
```
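Once the daemon is up, one way to confirm that the ResourceManager is reachable from other machines is to open a TCP connection to its ports. The sketch below is a hypothetical JDK-only helper, not a Hadoop tool. It assumes the default ports 8032 (client RPC) and 8088 (web UI); both appear in the job log later in this article.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: reports whether something accepts TCP connections on host:port.
public class PortProbe {

    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;  // refused, unreachable or timed out
        }
    }

    public static void main(String[] args) {
        String rmHost = "192.168.56.101";
        // 8032: yarn.resourcemanager.address, 8088: yarn.resourcemanager.webapp.address (defaults)
        for (int port : new int[] {8032, 8088}) {
            System.out.println(rmHost + ":" + port + " listening = " + isListening(rmHost, port, 2000));
        }
    }
}
```

A false result usually means the daemon failed to start (check the .out and .log files under logs/) or a firewall is blocking the port.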

Log in to each of the nodes 192.168.56.107 to 192.168.56.109 and start a NodeManager:

```shell
$ ./sbin/yarn-daemon.sh start nodemanager
starting nodemanager, logging to /home/hadoop/hadoop-2.8.1/logs/yarn-root-nodemanager-vm7.out
```

Configuring and starting the HistoryServer

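Log in to 192.168.56.101 and add the following properties to mapred-site.xml, keeping the mapreduce.framework.name property configured above: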

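```xml
<configuration>
    <!-- Web UI address of the JobHistory Server -->
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>192.168.56.101:19888</value>
    </property>
    <!-- RPC (IPC) address of the JobHistory Server -->
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>192.168.56.101:10020</value>
    </property>
    <!-- HDFS directory where MapReduce jobs write their history files -->
    <property>
        <name>mapreduce.jobhistory.intermediate-done-dir</name>
        <value>/mr-history/tmp</value>
    </property>
    <!-- HDFS directory where the JobHistory Server manages the history files -->
    <property>
        <name>mapreduce.jobhistory.done-dir</name>
        <value>/mr-history/done</value>
    </property>
</configuration>
```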

Start the HistoryServer, then check that its directories exist in HDFS:

```shell
$ ./sbin/mr-jobhistory-daemon.sh start historyserver
starting historyserver, logging to /home/hadoop/hadoop-2.8.1/logs/mapred-root-historyserver-vm1.out
$ ./bin/hdfs dfs -ls /mr-history
Found 2 items
drwxrwx--- - root supergroup 0 2017-10-21 19:38 /mr-history/done
drwxrwxrwt - root supergroup 0 2017-10-21 19:38 /mr-history/tmp
```
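The two directories have different permissions:

- /mr-history/done is drwxrwx---, octal 0770. Only the owner (root) and the supergroup group can access it.
- /mr-history/tmp is drwxrwxrwt, octal 1777. It is world-writable with the sticky bit set, so any user's jobs can write history files there. However, only a file's owner, the directory owner or the superuser can delete or rename those files.

The small Java sketch below shows the symbolic-to-octal conversion used in that reading. PermissionOctal is a hypothetical helper, not part of Hadoop.

```java
// Hypothetical helper: converts an ls-style permission string such as "drwxrwxrwt"
// into four-digit octal form (special bits, owner, group, others).
public class PermissionOctal {

    // Special-bit letter and value for the owner, group and others triplets
    private static final char[] SPECIAL_LETTER = {'s', 's', 't'};  // setuid, setgid, sticky
    private static final int[] SPECIAL_VALUE = {4, 2, 1};

    static String toOctal(String perm) {
        if (perm.length() != 10) {
            throw new IllegalArgumentException("Expected 10 characters, e.g. drwxr-xr-x: " + perm);
        }
        int special = 0;
        StringBuilder digits = new StringBuilder();
        for (int group = 0; group < 3; group++) {
            int base = 1 + group * 3;  // index 0 is the file-type character
            char r = perm.charAt(base), w = perm.charAt(base + 1), x = perm.charAt(base + 2);
            int digit = (r == 'r' ? 4 : 0) + (w == 'w' ? 2 : 0);
            // Lowercase s/t means "special bit + execute"; uppercase S/T means special bit only
            if (x == 'x' || x == SPECIAL_LETTER[group]) {
                digit += 1;
            }
            if (Character.toLowerCase(x) == SPECIAL_LETTER[group]) {
                special += SPECIAL_VALUE[group];
            }
            digits.append(digit);
        }
        return special + digits.toString();
    }

    public static void main(String[] args) {
        System.out.println(toOctal("drwxrwx---"));  // 0770
        System.out.println(toOctal("drwxrwxrwt"));  // 1777
    }
}
```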

Maven dependencies for the word-count project (pom.xml; the versions match the cluster's Hadoop 2.8.1):

```xml
<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.8.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.8.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-mapreduce-client-common</artifactId>
        <version>2.8.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-mapreduce-client-core</artifactId>
        <version>2.8.1</version>
    </dependency>
</dependencies>
```

The word-count job, mrtest/BookWordCounter.java:

```java
package mrtest;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class BookWordCounter {

    public static class WordCounterMapper extends Mapper<Object, Text, Text, IntWritable> {

        // Map-phase output value: each occurrence of a word counts as 1
        private final static IntWritable outputValue = new IntWritable(1);

        // Map-phase output key: the current word
        private Text outputKey = new Text();

        @Override
        protected void map(Object key, Text value, Context context) throws IOException, InterruptedException {
            StringTokenizer tokenizer = new StringTokenizer(value.toString());
            while (tokenizer.hasMoreTokens()) {
                String eachWord = tokenizer.nextToken();
                // Strip punctuation and digits
                eachWord = eachWord.replaceAll("[\\pP\\p{Punct}0-9]", "").trim();
                // Tokens made only of punctuation/digits are now empty: skip them
                if (eachWord.isEmpty()) {
                    continue;
                }
                outputKey.set(eachWord);
                context.write(outputKey, outputValue);
            }
        }
    }

    public static class WordCounterReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Sum the 1s emitted by the mappers for this word
            int sum = 0;
            for (IntWritable count : values) {
                sum += count.get();
            }
            context.write(key, new IntWritable(sum));
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        // Create the job instance
        Job job = Job.getInstance(conf, "BookWordCounter");
        job.setJarByClass(BookWordCounter.class);
        job.setMapperClass(WordCounterMapper.class);
        job.setReducerClass(WordCounterReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // For local debugging pass local file paths; on the Hadoop cluster pass HDFS paths
        // Input file
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        // Output directory (must not exist yet)
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Run the job and wait for it to finish; true prints progress to the console
        boolean r = job.waitForCompletion(true);
        System.out.println(r);
    }
}
```
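To see what the job computes without a cluster, the JDK-only sketch below replays its three steps in memory. LocalWordCount is a hypothetical class for illustration only.

- The map step applies the same tokenizer and cleaning regex as WordCounterMapper.
- A TreeMap stands in for the framework's shuffle, which sorts and groups keys. For ASCII words, Hadoop's byte-order sort of Text keys gives the same order as Java String ordering.
- The reduce step sums each group, as WordCounterReducer does.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// JDK-only, in-memory sketch of what BookWordCounter computes.
// LocalWordCount is hypothetical; it is not part of Hadoop.
public class LocalWordCount {

    // Map: emit (word, 1) per cleaned, non-empty token, like WordCounterMapper
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            String word = tokenizer.nextToken().replaceAll("[\\pP\\p{Punct}0-9]", "").trim();
            if (!word.isEmpty()) {
                pairs.add(new SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle/sort: group values by key; the TreeMap keeps keys sorted
    static TreeMap<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        TreeMap<String, List<Integer>> groups = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            groups.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return groups;
    }

    // Reduce: sum each group's values, like WordCounterReducer
    static TreeMap<String, Integer> reduce(TreeMap<String, List<Integer>> groups) {
        TreeMap<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : groups.entrySet()) {
            int sum = 0;
            for (int value : group.getValue()) {
                sum += value;
            }
            counts.put(group.getKey(), sum);
        }
        return counts;
    }

    static TreeMap<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        return reduce(shuffle(pairs));
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("The flight, the fling!", "flight 42 -- flight");
        System.out.println(wordCount(lines));  // {The=1, flight=3, fling=1, the=1}
    }
}
```

Like the mapper above, this is case-sensitive: "The" and "the" become separate keys.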

Build the jar:

```shell
$ mvn package
```

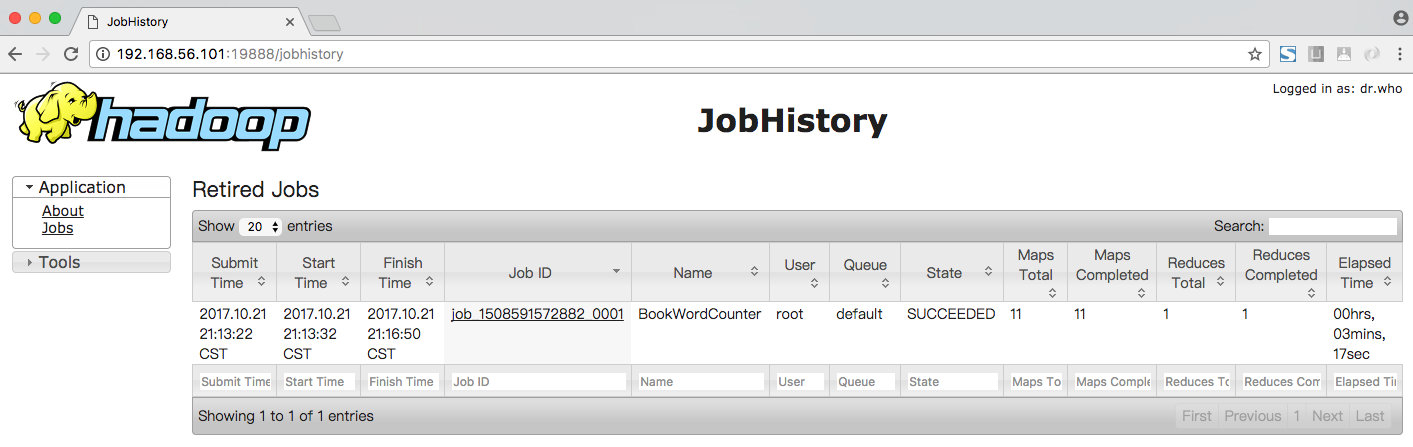

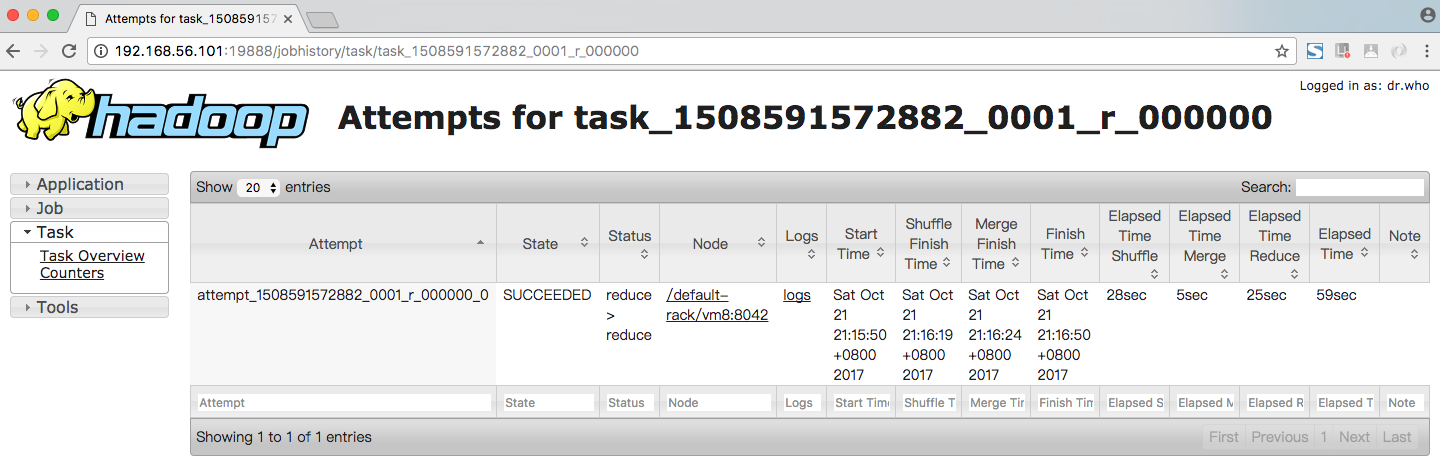

```shell
$ ./bin/yarn jar /mnt/vm_share/mrtest-0.0.1-SNAPSHOT.jar mrtest.BookWordCounter /some_book_en_for_mr.txt /some_book_en_for_mr_result
17/10/21 21:13:20 INFO client.RMProxy: Connecting to ResourceManager at /192.168.56.101:8032
17/10/21 21:13:21 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
17/10/21 21:13:21 INFO input.FileInputFormat: Total input files to process : 1
17/10/21 21:13:21 INFO mapreduce.JobSubmitter: number of splits:11
17/10/21 21:13:21 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1508591572882_0001
17/10/21 21:13:22 INFO impl.YarnClientImpl: Submitted application application_1508591572882_0001
17/10/21 21:13:22 INFO mapreduce.Job: The url to track the job: http://vm1:8088/proxy/application_1508591572882_0001/
17/10/21 21:13:22 INFO mapreduce.Job: Running job: job_1508591572882_0001
17/10/21 21:13:33 INFO mapreduce.Job: Job job_1508591572882_0001 running in uber mode : false
17/10/21 21:13:33 INFO mapreduce.Job: map 0% reduce 0%
17/10/21 21:14:13 INFO mapreduce.Job: map 1% reduce 0%
17/10/21 21:14:14 INFO mapreduce.Job: map 2% reduce 0%
17/10/21 21:14:16 INFO mapreduce.Job: map 3% reduce 0%
17/10/21 21:14:17 INFO mapreduce.Job: map 4% reduce 0%
17/10/21 21:14:20 INFO mapreduce.Job: map 5% reduce 0%
17/10/21 21:14:21 INFO mapreduce.Job: map 6% reduce 0%
17/10/21 21:14:23 INFO mapreduce.Job: map 8% reduce 0%
17/10/21 21:14:24 INFO mapreduce.Job: map 9% reduce 0%
17/10/21 21:14:26 INFO mapreduce.Job: map 10% reduce 0%
17/10/21 21:14:28 INFO mapreduce.Job: map 11% reduce 0%
17/10/21 21:14:30 INFO mapreduce.Job: map 16% reduce 0%
17/10/21 21:14:32 INFO mapreduce.Job: map 17% reduce 0%
17/10/21 21:14:33 INFO mapreduce.Job: map 18% reduce 0%
17/10/21 21:14:34 INFO mapreduce.Job: map 25% reduce 0%
17/10/21 21:14:36 INFO mapreduce.Job: map 26% reduce 0%
17/10/21 21:14:38 INFO mapreduce.Job: map 28% reduce 0%
17/10/21 21:14:40 INFO mapreduce.Job: map 31% reduce 0%
17/10/21 21:14:42 INFO mapreduce.Job: map 35% reduce 0%
17/10/21 21:14:45 INFO mapreduce.Job: map 36% reduce 0%
17/10/21 21:14:47 INFO mapreduce.Job: map 37% reduce 0%
17/10/21 21:14:48 INFO mapreduce.Job: map 39% reduce 0%
17/10/21 21:14:49 INFO mapreduce.Job: map 40% reduce 0%
17/10/21 21:14:53 INFO mapreduce.Job: map 41% reduce 0%
17/10/21 21:14:56 INFO mapreduce.Job: map 42% reduce 0%
17/10/21 21:15:00 INFO mapreduce.Job: map 43% reduce 0%
17/10/21 21:15:14 INFO mapreduce.Job: map 45% reduce 0%
17/10/21 21:15:16 INFO mapreduce.Job: map 46% reduce 0%
17/10/21 21:15:19 INFO mapreduce.Job: map 47% reduce 0%
17/10/21 21:15:20 INFO mapreduce.Job: map 49% reduce 0%
17/10/21 21:15:22 INFO mapreduce.Job: map 53% reduce 0%
17/10/21 21:15:25 INFO mapreduce.Job: map 54% reduce 0%
17/10/21 21:15:28 INFO mapreduce.Job: map 55% reduce 0%
17/10/21 21:15:34 INFO mapreduce.Job: map 59% reduce 0%
17/10/21 21:15:35 INFO mapreduce.Job: map 61% reduce 0%
17/10/21 21:15:36 INFO mapreduce.Job: map 62% reduce 0%
17/10/21 21:15:38 INFO mapreduce.Job: map 64% reduce 0%
17/10/21 21:15:40 INFO mapreduce.Job: map 69% reduce 0%
17/10/21 21:15:42 INFO mapreduce.Job: map 70% reduce 0%
17/10/21 21:15:44 INFO mapreduce.Job: map 71% reduce 0%
17/10/21 21:15:45 INFO mapreduce.Job: map 74% reduce 0%
17/10/21 21:15:47 INFO mapreduce.Job: map 77% reduce 0%
17/10/21 21:15:49 INFO mapreduce.Job: map 78% reduce 0%
17/10/21 21:15:50 INFO mapreduce.Job: map 81% reduce 0%
17/10/21 21:15:51 INFO mapreduce.Job: map 82% reduce 0%
17/10/21 21:16:05 INFO mapreduce.Job: map 85% reduce 0%
17/10/21 21:16:07 INFO mapreduce.Job: map 87% reduce 0%
17/10/21 21:16:09 INFO mapreduce.Job: map 87% reduce 15%
17/10/21 21:16:11 INFO mapreduce.Job: map 91% reduce 15%
17/10/21 21:16:12 INFO mapreduce.Job: map 93% reduce 15%
17/10/21 21:16:13 INFO mapreduce.Job: map 95% reduce 15%
17/10/21 21:16:15 INFO mapreduce.Job: map 96% reduce 15%
17/10/21 21:16:16 INFO mapreduce.Job: map 97% reduce 15%
17/10/21 21:16:17 INFO mapreduce.Job: map 99% reduce 15%
17/10/21 21:16:18 INFO mapreduce.Job: map 100% reduce 15%
17/10/21 21:16:21 INFO mapreduce.Job: map 100% reduce 38%
17/10/21 21:16:27 INFO mapreduce.Job: map 100% reduce 68%
17/10/21 21:16:34 INFO mapreduce.Job: map 100% reduce 76%
17/10/21 21:16:40 INFO mapreduce.Job: map 100% reduce 84%
17/10/21 21:16:46 INFO mapreduce.Job: map 100% reduce 93%
17/10/21 21:16:52 INFO mapreduce.Job: map 100% reduce 100%
17/10/21 21:16:52 INFO mapreduce.Job: Job job_1508591572882_0001 completed successfully
17/10/21 21:16:52 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=1641052185
        FILE: Number of bytes written=2394963518
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=368971925
        HDFS: Number of bytes written=341707
        HDFS: Number of read operations=36
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=11
        Launched reduce tasks=1
        Data-local map tasks=11
        Total time spent by all maps in occupied slots (ms)=1621725
        Total time spent by all reduces in occupied slots (ms)=59608
        Total time spent by all map tasks (ms)=1621725
        Total time spent by all reduce tasks (ms)=59608
        Total vcore-milliseconds taken by all map tasks=1621725
        Total vcore-milliseconds taken by all reduce tasks=59608
        Total megabyte-milliseconds taken by all map tasks=1660646400
        Total megabyte-milliseconds taken by all reduce tasks=61038592
    Map-Reduce Framework
        Map input records=7343500
        Map output records=67914600
        Map output bytes=616451597
        Map output materialized bytes=752280863
        Input split bytes=1265
        Combine input records=0
        Combine output records=0
        Reduce input groups=27344
        Reduce shuffle bytes=752280863
        Reduce input records=67914600
        Reduce output records=27344
        Spilled Records=216065635
        Shuffled Maps =11
        Failed Shuffles=0
        Merged Map outputs=11
        GC time elapsed (ms)=18825
        CPU time spent (ms)=264450
        Physical memory (bytes) snapshot=2256031744
        Virtual memory (bytes) snapshot=24974262272
        Total committed heap usage (bytes)=1519169536
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=368970660
    File Output Format Counters
        Bytes Written=341707
true
```
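The Job Counters above can be cross-checked with a little arithmetic. The "megabyte-milliseconds" and "vcore-milliseconds" counters are the resource held per task multiplied by the task's running time. Dividing them by the plain "time spent" counters therefore gives the average container size:

- 1660646400 / 1621725 = 1024 MB for the map tasks.
- 61038592 / 59608 = 1024 MB for the reduce task.
- Each task used one vcore.

As far as we know, 1024 MB matches the Hadoop 2.x defaults of mapreduce.map.memory.mb and mapreduce.reduce.memory.mb. The numbers in the sketch below are copied from the log, and CounterCheck is a hypothetical helper.

```java
// Worked check of the Job Counters in the log above (values copied from the log).
// CounterCheck is a hypothetical helper used only for the arithmetic.
public class CounterCheck {

    // "<resource>-milliseconds" = resource per task x task milliseconds (summed over tasks),
    // so dividing by the summed task milliseconds gives the average resource per task.
    static double perTask(long resourceMillis, long taskMillis) {
        if (taskMillis <= 0) {
            throw new IllegalArgumentException("taskMillis must be positive");
        }
        return (double) resourceMillis / taskMillis;
    }

    public static void main(String[] args) {
        long mapMs = 1621725L;
        long reduceMs = 59608L;
        System.out.println("MB per map container:    " + perTask(1660646400L, mapMs));   // 1024.0
        System.out.println("MB per reduce container: " + perTask(61038592L, reduceMs));  // 1024.0
        System.out.println("vcores per map task:     " + perTask(1621725L, mapMs));      // 1.0
        System.out.println("vcores per reduce task:  " + perTask(59608L, reduceMs));     // 1.0
    }
}
```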

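```shell
$ ./bin/hdfs dfs -tail /some_book_en_for_mr_result/part-r-00000
00
flicker 200
flickered 600
flickerin 100
flickering 600
flicking 100
flict 100
flicted 500
flier 200
fliers 100
flies 600
flight 3300
flinch 100
fling 200
...
```

All counts shown are multiples of 100 because the input file is the same book concatenated 100 times by create_book_text_for_mr.sh.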

Start the NodeManager

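```shell
$ vim ./create_book_text_for_mr.sh
```

The script appends some_book_en.txt to some_book_en_for_mr.txt 100 times to produce a larger test input (about 350 MB, see the listing below):

```shell
#!/bin/bash
for i in {1..100}
do
    cat ./some_book_en.txt >> ./some_book_en_for_mr.txt
done
```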

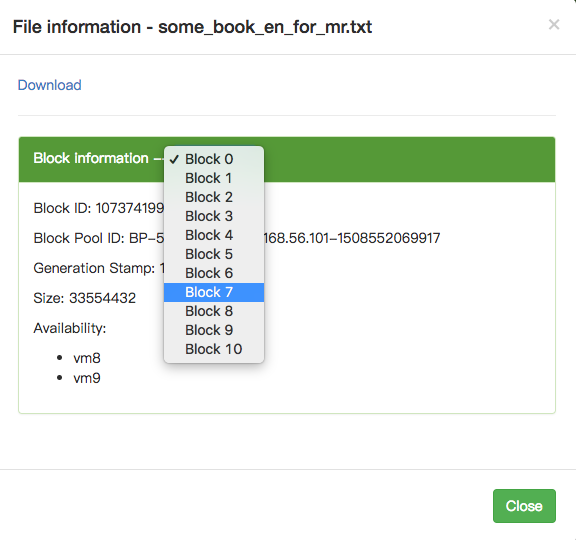

```shell
$ ./bin/hadoop fs -put /mnt/vm_share/some_book_en_for_mr.txt /
$ ./bin/hadoop fs -ls -h /some_book_en_for_mr.txt
-rw-r--r-- 2 root supergroup 351.8 M 2017-10-21 19:08 /some_book_en_for_mr.txt
```

```shell
$ ./bin/hdfs fsck /some_book_en_for_mr.txt -files -blocks -locations
Connecting to namenode via http://vm1:50070/fsck?ugi=root&files=1&blocks=1&locations=1&path=%2Fsome_book_en_for_mr.txt
FSCK started by root (auth:SIMPLE) from /192.168.56.101 for path /some_book_en_for_mr.txt at Sat Oct 21 21:23:20 CST 2017
/some_book_en_for_mr.txt 368929700 bytes, 11 block(s): OK
0. BP-525791482-192.168.56.101-1508552069917:blk_1073741993_1169 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK], DatanodeInfoWithStorage[192.168.56.108:50010,DS-4e6deea2-8fcb-4294-b5c4-4e4371cf5dc3,DISK]]
1. BP-525791482-192.168.56.101-1508552069917:blk_1073741994_1170 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK], DatanodeInfoWithStorage[192.168.56.108:50010,DS-4e6deea2-8fcb-4294-b5c4-4e4371cf5dc3,DISK]]
2. BP-525791482-192.168.56.101-1508552069917:blk_1073741995_1171 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK], DatanodeInfoWithStorage[192.168.56.107:50010,DS-1e7315b1-f8bd-42c3-929f-ac891c4c1464,DISK]]
3. BP-525791482-192.168.56.101-1508552069917:blk_1073741996_1172 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.107:50010,DS-1e7315b1-f8bd-42c3-929f-ac891c4c1464,DISK], DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK]]
4. BP-525791482-192.168.56.101-1508552069917:blk_1073741997_1173 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.107:50010,DS-1e7315b1-f8bd-42c3-929f-ac891c4c1464,DISK], DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK]]
5. BP-525791482-192.168.56.101-1508552069917:blk_1073741998_1174 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.108:50010,DS-4e6deea2-8fcb-4294-b5c4-4e4371cf5dc3,DISK], DatanodeInfoWithStorage[192.168.56.107:50010,DS-1e7315b1-f8bd-42c3-929f-ac891c4c1464,DISK]]
6. BP-525791482-192.168.56.101-1508552069917:blk_1073741999_1175 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.107:50010,DS-1e7315b1-f8bd-42c3-929f-ac891c4c1464,DISK], DatanodeInfoWithStorage[192.168.56.108:50010,DS-4e6deea2-8fcb-4294-b5c4-4e4371cf5dc3,DISK]]
7. BP-525791482-192.168.56.101-1508552069917:blk_1073742000_1176 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.108:50010,DS-4e6deea2-8fcb-4294-b5c4-4e4371cf5dc3,DISK], DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK]]
8. BP-525791482-192.168.56.101-1508552069917:blk_1073742001_1177 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK], DatanodeInfoWithStorage[192.168.56.107:50010,DS-1e7315b1-f8bd-42c3-929f-ac891c4c1464,DISK]]
9. BP-525791482-192.168.56.101-1508552069917:blk_1073742002_1178 len=33554432 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK], DatanodeInfoWithStorage[192.168.56.107:50010,DS-1e7315b1-f8bd-42c3-929f-ac891c4c1464,DISK]]
10. BP-525791482-192.168.56.101-1508552069917:blk_1073742003_1179 len=33385380 Live_repl=2 [DatanodeInfoWithStorage[192.168.56.109:50010,DS-27a20178-43f1-47a1-a071-6684fdbcfa69,DISK], DatanodeInfoWithStorage[192.168.56.108:50010,DS-4e6deea2-8fcb-4294-b5c4-4e4371cf5dc3,DISK]]

Status: HEALTHY
Total size: 368929700 B
Total dirs: 0
Total files: 1
Total symlinks: 0
Total blocks (validated): 11 (avg. block size 33539063 B)
Minimally replicated blocks: 11 (100.0 %)
Over-replicated blocks: 0 (0.0 %)
Under-replicated blocks: 0 (0.0 %)
Mis-replicated blocks: 0 (0.0 %)
Default replication factor: 2
Average block replication: 2.0
Corrupt blocks: 0
Missing replicas: 0 (0.0 %)
Number of data-nodes: 3
Number of racks: 1
FSCK ended at Sat Oct 21 21:23:20 CST 2017 in 3 milliseconds

The filesystem under path '/some_book_en_for_mr.txt' is HEALTHY
```
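The fsck output explains the "number of splits:11" line in the job log. This cluster apparently uses 32 MB blocks (33554432 bytes), and the file occupies 11 of them, the last one being 33385380 bytes. With default minimum and maximum split sizes, FileInputFormat therefore produces one split, and thus one map task, per block.

The plain-Java sketch below reproduces that arithmetic. SplitCount is hypothetical. It mirrors, as we understand it, the split-size formula and the 1.1 "slop" factor of org.apache.hadoop.mapreduce.lib.input.FileInputFormat.getSplits. It is simplified to a single splittable file and ignores block locations.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified sketch of how FileInputFormat (new MapReduce API) cuts one splittable
// file into input splits. SplitCount is hypothetical; block locations are ignored.
public class SplitCount {

    // The last split may be up to 10% larger than splitSize instead of leaving a tiny tail split
    static final double SPLIT_SLOP = 1.1;

    // splitSize = max(minSize, min(maxSize, blockSize))
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Lengths of the splits produced for a file of fileLength bytes
    static List<Long> splitLengths(long fileLength, long splitSize) {
        List<Long> lengths = new ArrayList<>();
        long bytesRemaining = fileLength;
        while (((double) bytesRemaining) / splitSize > SPLIT_SLOP) {
            lengths.add(splitSize);
            bytesRemaining -= splitSize;
        }
        if (bytesRemaining != 0) {
            lengths.add(bytesRemaining);
        }
        return lengths;
    }

    public static void main(String[] args) {
        long fileLength = 368929700L;  // "Total size" reported by fsck
        long blockSize = 33554432L;    // 32 MB blocks, per fsck
        // Assumed defaults: minimum split size 1 byte, maximum unbounded
        long splitSize = computeSplitSize(blockSize, 1L, Long.MAX_VALUE);
        List<Long> splits = splitLengths(fileLength, splitSize);
        System.out.println(splits.size() + " splits, last split = " + splits.get(splits.size() - 1) + " bytes");
        // prints: 11 splits, last split = 33385380 bytes
    }
}
```

With the file's 368929700 bytes, ten full 33554432-byte splits leave 33385380 bytes. That remainder is below 1.1 times the split size, so it becomes the eleventh split, matching block 10 in the fsck listing.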

```shell
$ ./sbin/start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /home/hadoop/hadoop-2.8.1/logs/yarn-root-resourcemanager-vm1.out
192.168.56.109: starting nodemanager, logging to /home/hadoop/hadoop-2.8.1/logs/yarn-root-nodemanager-vm9.out
192.168.56.108: starting nodemanager, logging to /home/hadoop/hadoop-2.8.1/logs/yarn-root-nodemanager-vm8.out
192.168.56.107: starting nodemanager, logging to /home/hadoop/hadoop-2.8.1/logs/yarn-root-nodemanager-vm7.out
```