HDFS

Concepts

TODO

Configuration

Set up the global configuration file core-site.xml:

<configuration>

<property>

<!-- Address of the NameNode -->

<name>fs.defaultFS</name>

<value>hdfs://192.168.56.101:9000</value>

</property>

<!-- Temporary file directory (TODO: are intermediate results stored in temporary files?) -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp</value>

</property>

</configuration>

This example uses only the minimal configuration needed to get a Hadoop cluster running. For more core-site.xml options, see https://hadoop.apache.org/docs/r2.8.0/hadoop-project-dist/hadoop-common/core-default.xml.
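
One way to confirm that Hadoop picks up these values is to ask it for the effective configuration. The sketch below assumes the `hdfs` command is on the PATH of the node where core-site.xml was edited; `show_conf` is a hypothetical helper name, and it prints `(unavailable)` when the lookup fails.

```shell
#!/bin/sh
# show_conf KEY: print the effective value of a Hadoop configuration key.
# Hypothetical helper; it relies on `hdfs getconf -confKey`, which reads the
# files in the active Hadoop configuration directory.
show_conf() {
    key=$1
    # Fall back to a placeholder if hdfs is missing or the lookup fails.
    value=$(hdfs getconf -confKey "$key" 2>/dev/null) || value="(unavailable)"
    echo "$key = $value"
}

# If the core-site.xml above is in effect, these should echo its <value> entries.
show_conf fs.defaultFS
show_conf hadoop.tmp.dir
```

Wrapping the lookup in a helper keeps the check usable on machines where Hadoop is not installed yet, at the cost of hiding the underlying error message.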

Configure hdfs-site.xml to set the HDFS file system parameters:

<configuration>

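<!-- Where the NameNode stores its metadata (the fsimage name table). Multiple directories may be listed, separated by commas; every directory holds exactly the same content. A production server usually has several disks, so listing directories on different disks keeps a redundant copy and guards against data loss when one disk fails. -->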

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/dfs/namenode,/mnt/disk2/dfs/namenode</value>

</property>

<property>

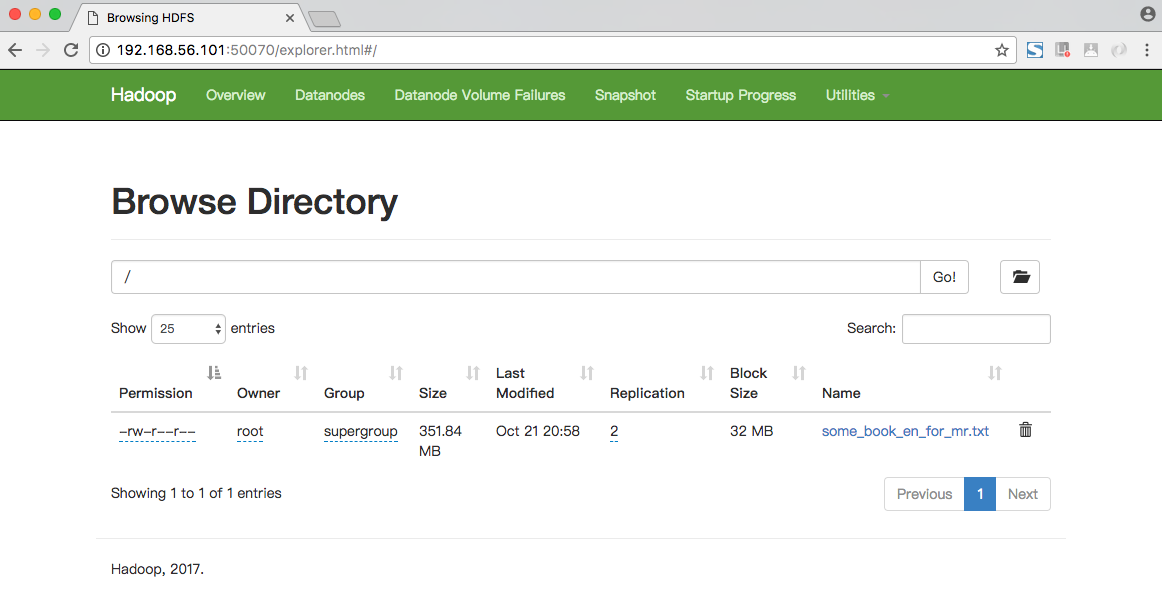

<!-- Number of replicas kept for each block -->

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- Block size; the Hadoop 2 default is 128m -->

<property>

<name>dfs.blocksize</name>

<value>32m</value>

</property>

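<!-- Address of the NameNode web UI (dfs.http.address is the deprecated name of dfs.namenode.http-address; Hadoop 2 accepts both) -->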

<property>

<name>dfs.http.address</name>

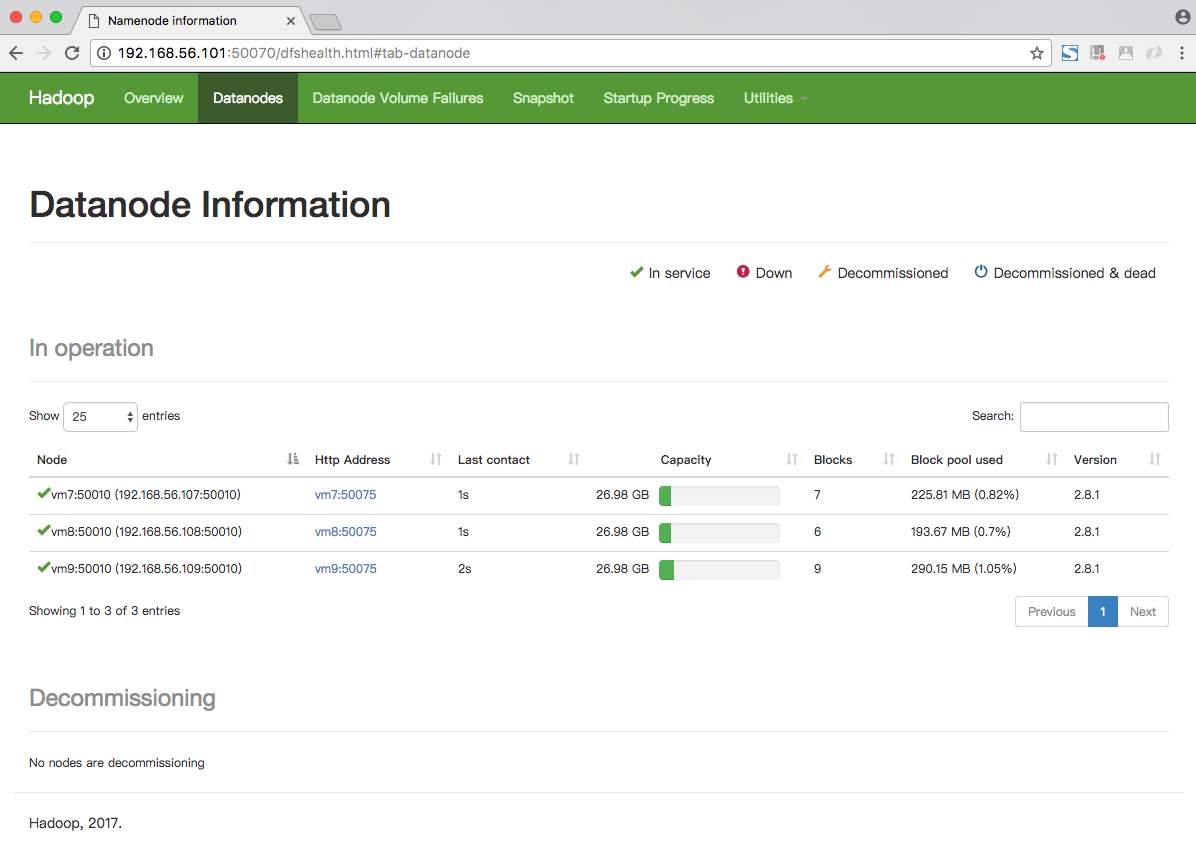

<value>192.168.56.101:50070</value>

</property>

</configuration>

TODO

For more hdfs-site.xml options, see https://hadoop.apache.org/docs/r2.8.0/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml.
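
To make the effect of dfs.blocksize and dfs.replication concrete, the following sketch works out how a single file would be split and replicated under the settings above. The 100 MiB file size is a made-up example value; the block size and replication factor are taken from the hdfs-site.xml shown earlier.

```shell
#!/bin/sh
# Hypothetical input: a 100 MiB file written with the settings above.
file_mib=100
block_mib=32     # dfs.blocksize = 32m
replication=2    # dfs.replication = 2

# Ceiling division: the final block only holds the leftover bytes.
blocks=$(( (file_mib + block_mib - 1) / block_mib ))
last_block_mib=$(( file_mib - (blocks - 1) * block_mib ))

# Every block is stored `replication` times across the DataNodes.
replicas=$(( blocks * replication ))
raw_mib=$(( file_mib * replication ))

echo "blocks=$blocks last_block_mib=$last_block_mib replicas=$replicas raw_mib=$raw_mib"
# prints: blocks=4 last_block_mib=4 replicas=8 raw_mib=200
```

The last block is not padded to the full block size, so raw disk usage is the file size times the replication factor, not the block count times the block size.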

Restart

$ ./sbin/start-dfs.sh (TODO)

Starting namenodes on [vm1]

vm1: namenode running as process 21796. Stop it first.

192.168.56.109: datanode running as process 8196. Stop it first.

192.168.56.107: datanode running as process 8755. Stop it first.

192.168.56.108: datanode running as process 8426. Stop it first.

Starting secondary namenodes [0.0.0.0]

0.0.0.0: secondarynamenode running as process 22007. Stop it first.
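
The "Stop it first" messages above mean the daemons were already running, so start-dfs.sh left them untouched and they have not reloaded the edited configuration. A minimal restart sketch follows, assuming the standard sbin/stop-dfs.sh and sbin/start-dfs.sh scripts exist under the Hadoop installation directory; `restart_dfs` is a hypothetical helper name.

```shell
#!/bin/sh
# restart_dfs HADOOP_DIR: stop the HDFS daemons, then start them again so
# they reload core-site.xml and hdfs-site.xml.
# Hypothetical helper; HADOOP_DIR is the Hadoop installation directory.
restart_dfs() {
    hadoop_dir=$1
    if [ ! -x "$hadoop_dir/sbin/stop-dfs.sh" ] || [ ! -x "$hadoop_dir/sbin/start-dfs.sh" ]; then
        echo "stop-dfs.sh / start-dfs.sh not found under $hadoop_dir/sbin" >&2
        return 1
    fi
    # Only start again if the stop step succeeded.
    "$hadoop_dir/sbin/stop-dfs.sh" && "$hadoop_dir/sbin/start-dfs.sh"
}
```

From the installation directory used in the command above, this would be called as `restart_dfs .`; running `jps` on each node afterwards lists the Java daemons that came back up.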

TODO